The Moderation article

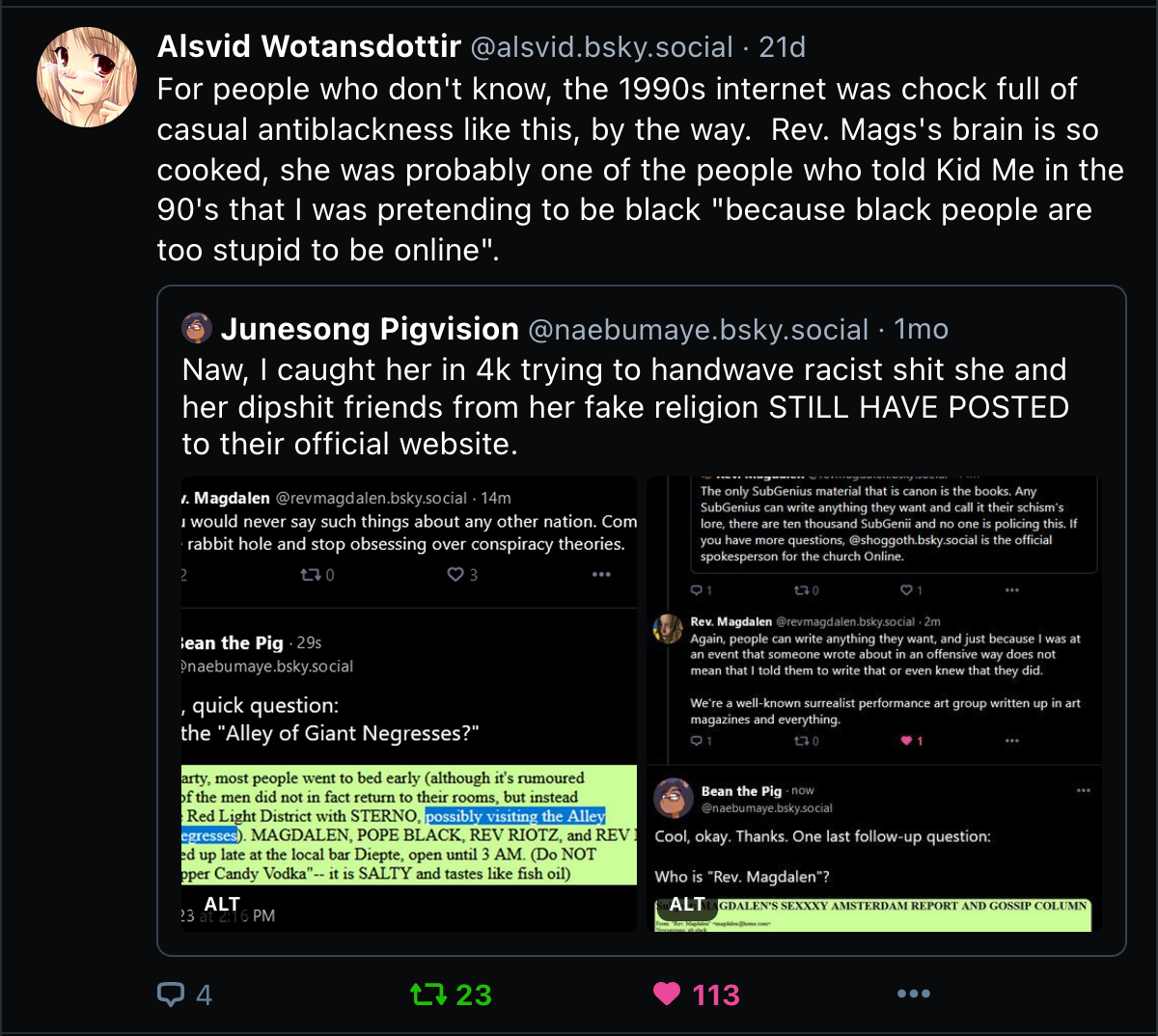

From the very beginning, the internet was antiblack.

The early 1990s Internet had become a cafeteria, filled with the text-based musings and rantings produced by the generations of Usenet and BBS users from which the Internet evolved. It had become a place where you could find and sample expressions on just about everything you wanted. Porn. Politics. Suicide. Aliens. African Americans. Anarchists plotting world domination. And from day one, this environment was hostile to black people.

-Charlton D. McIlwain (Black Software, 2020, p. 130)

An article about Bluesky App's moderation issues is the first result that comes up when you Google search "fediverse antiblackness", which is incredibly funny for a place that isn't part of the fediverse (it's the interconnected ATmosphere, duh) and also refuses to acknowledge issues with antiblackness. This post isn't about the moderation issues Bluesky has faced. I've written about that before. This post is to organize all of my thoughts on AT Protocol's composable moderation features, moderation in general on decentralized social media, and how I plan to approach things for Blacksky.

I am not an expert on trust & safety. I am not a Blackademic. I have, however, been Black my whole life and on the internet for 85% of it. I also have a high degree of agency in this because of Bluesky's decentralized, open source nature, and an equal amount of urgency, having put a lot of work into Blacksky The Feed. So, whether the non-Black trust & safety expert gatekeepers like it or not, I have something to say on the matter.

Note: Some of the features I describe here are still under active development and may change. But I wanted to give some insight into AT Protocol's design and how it fits into my own political frameworks.

Also Note: This article is for nerds and the otherwise curious.

Overhead Projectors & Composing Moderation

The composable in composable moderation refers to a hodgepodge of moderation features that can be mostly self-selected by each individual user to form the experience they see in-app. What's tricky about Bluesky PBC (and I think it's undersold itself here) is that its approach to moderation is neither fully centralized nor fully decentralized. It is neither solely dependent on a Bluesky App moderation team nor solely dependent on a volunteer labor force of moderators.

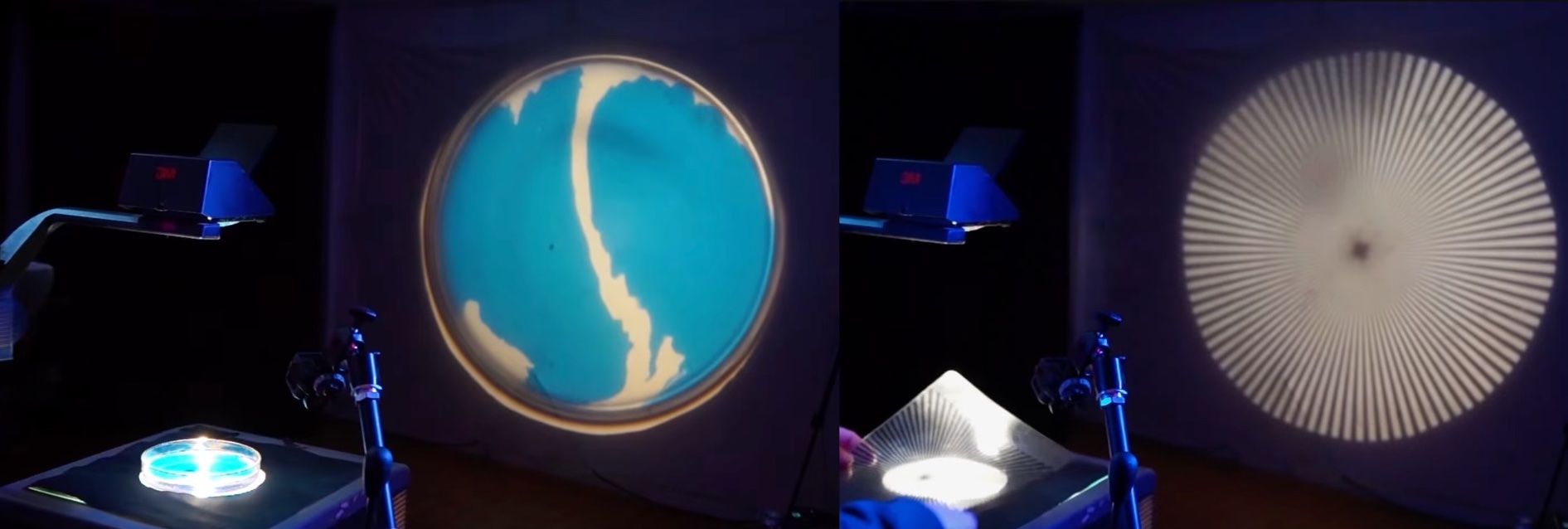

I personally think of composable moderation as a stacking of moderation authorities. Each authority has its own part to play and each is voluntarily selected by users to define their experience. To me, it's a lot like the overhead projectors we had in elementary school. You can overlay different transparent sheets or objects to create new compositions. In this analogy, the Bluesky App View is the projector and the canvas is the client you view it through. From there you could layer a non-transparent black piece of paper with a circle cut out, creating a perimeter. That's like a custom feed – curation as a form of moderation. Then you could place in that circle a cup of water - a mute/blocklist. Then you could pour blue liquid oil dye - a labeler service subscription.

The final image projected on the canvas is your unique user experience and you're free to swap out any of those overlay pieces you like except the projector and canvas as you will always need those to view any composition. That last part's important but I'll come back to it.
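One way to read the projector analogy is as a chain of filters applied to whatever the App View serves. Here's a minimal Python sketch of that idea. It is not how the Bluesky client or App View is actually implemented: `Post`, `compose_view`, and every parameter name are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    uri: str     # hypothetical identifier for the post
    author: str  # hypothetical handle of the author
    text: str


def compose_view(posts, in_feed, muted_authors, labels_by_uri, hidden_labels):
    """Stack the user-chosen layers over whatever the App View serves.

    in_feed        -- custom feed: callable deciding what is inside the "circle"
    muted_authors  -- mute/blocklist: set of authors to drop
    labels_by_uri  -- labeler subscription: post URI -> set of labels
    hidden_labels  -- labels this user has chosen to hide
    """
    view = []
    for post in posts:
        if not in_feed(post):
            continue  # outside the custom feed's perimeter
        if post.author in muted_authors:
            continue  # removed by the mute/blocklist layer
        if labels_by_uri.get(post.uri, set()) & hidden_labels:
            continue  # hidden because of a label the user opted to hide
        view.append(post)
    return view


# Example data (all hypothetical).
posts = [
    Post("post/1", "alice.example", "tomatoes are in"),
    Post("post/2", "bob.example", "tomatoes again"),
    Post("post/3", "carol.example", "tomato spam"),
    Post("post/4", "dave.example", "unrelated"),
]

visible = compose_view(
    posts,
    in_feed=lambda p: "tomato" in p.text,
    muted_authors={"bob.example"},
    labels_by_uri={"post/3": {"spam"}},
    hidden_labels={"spam"},
)
print([p.uri for p in visible])  # ['post/1']
```

Swapping any argument changes the final image, but `posts` itself (what the projector shines through) is not something the user gets to swap in this sketch.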

I've never seen anyone lay it all out, so here are all the players who ultimately serve to craft the experience of users:

- Clients: Client applications like the official web and iOS apps, colloquially referred to as "this [website/app]", are the face of Bluesky. People think the Bluesky app they downloaded and logged into is the whole app or the one true app. It is not: there are several clients, some of which are already ignoring users' privacy preferences.

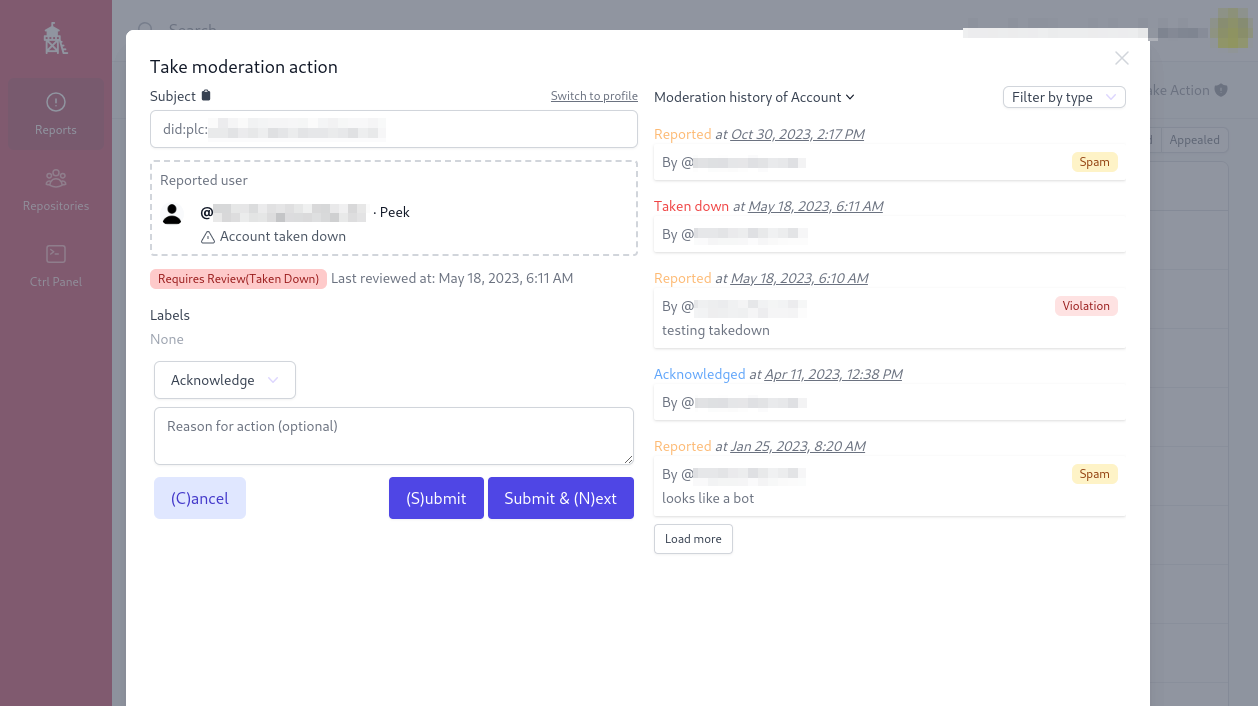

- App View: From Bluesky PBC's perspective, when they refer to "the app" they're talking about the App View plus the official Bluesky client. The App View takes in all the data sent from a relay and indexes it. This makes it as much if not more expensive to run than a relay (Bluesky set up some custom hardware/on-prem infrastructure to handle this). App views have a lot of moderation power! When you make a post it goes from your PDS -> Relay(s) -> the app view. This is where a social media company's centralized moderation happens. When you report a post or account and it gets taken down -- it is taken down from the App View. There will probably only ever be one Bluesky app view, but if someone makes "TikTok for AT Protocol" - that will have its own app view.

- Personal Data Servers (PDSs): A PDS is like your home server. The person controlling your PDS has a high degree of control over your data. A PDS's job is to hold your data and share it with the rest of the network. Bluesky used to have only one PDS at bsky.social, but it splintered into several as the network gained millions of users and as a test for federation. Now there are a few, all named after mushrooms, I gather. My account is currently on https://shiitake.us-east.host.bsky.network

PDS admins are also responsible for removing spammy and illegal content from their servers.

- Relays: Formerly known as Big Graph Services (BGSs), they relay or broadcast information through the network. Relays are how all of your likes, follows, and posts make their way over to people building custom feeds. As the backbone of the network you should think of them like internet service providers. Because they're expensive to run, they'll probably mostly be operated by corporations or governments. Relay operators play a big part in who gets to be included in the network. That said, these wouldn't be the folks who would defederate a "NaziSky" PDS instance. If they do defederate someone at this level, it'd probably be for reasons like "being US based and not allowing traffic from Iran" for example.

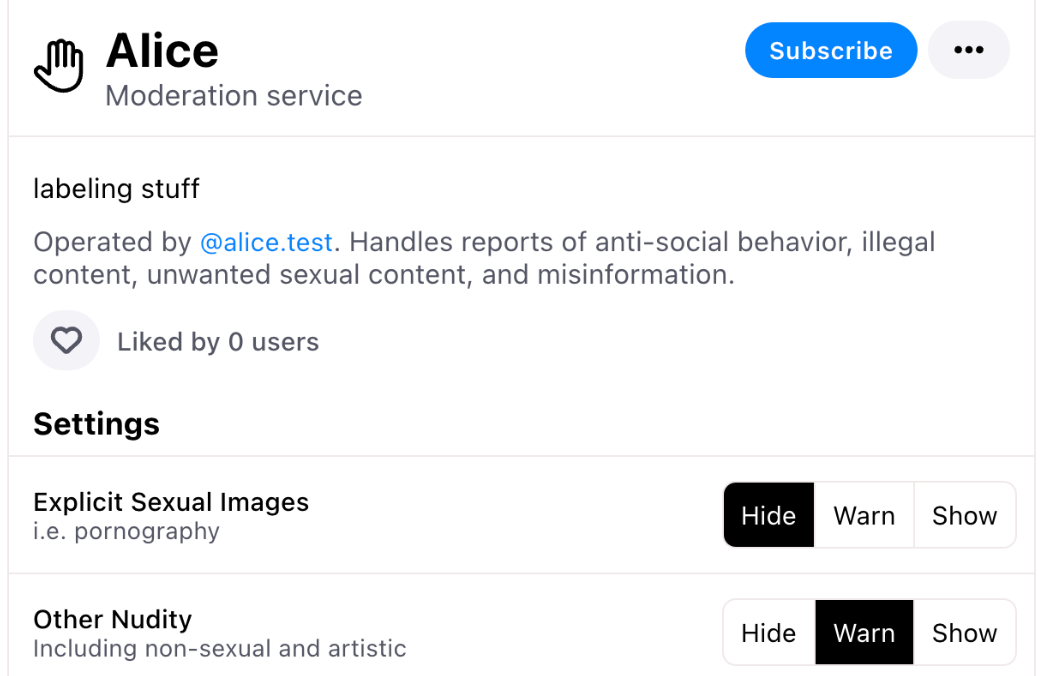

- Moderation Services (aka Labelers): These stack on top of app views and help to provide content warnings to users. They can't remove content from anywhere but instead help manually or automatically apply labels to posts, users, and even other labeler services. When moderation services go live, you'll be able to run code that automatically labels content as well as use tools like Ozone to manually apply labels. This is community moderation. It doesn't replace centralized moderation but will hopefully break down barriers to allow devs like myself to participate in creating a safer space online. Subscribing to a moderation service is private, even to the operator.

- Custom Feeds (aka feedgens): Custom feeds provide moderation by curation in that if you only ever look at one custom feed (like Blacksky, or Gardening) you never see anything outside of that anyway. And the operator of that feed can effectively shadowban someone by removing the individual or their content from a feed. Feed operators can see who's viewing their feed, which allows them to tailor it (e.g. For You).

- User Lists: Formerly just mutelists, you can now create generic lists of users. People will also have the option to click a button and mute or block everyone on the list. Blocks on AT Protocol are public; mutes are not.
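To make the split in power among these players a bit more concrete, here's a toy Python sketch of the PDS -> Relay -> App View flow described above. Everything in it (`PDS`, `AppView`, `relay`, the method names, the `post/1` style identifiers) is hypothetical and wildly simplified; it only illustrates that a takedown happens at the App View, while a labeler annotates content without removing it.

```python
class PDS:
    """Holds a user's records and shares them with the network."""

    def __init__(self):
        self.records = {}

    def create(self, uri, text):
        self.records[uri] = text
        return uri, text  # the event a relay would pick up


class AppView:
    """Indexes what relays send and decides what gets served to clients."""

    def __init__(self):
        self.index = {}
        self.taken_down = set()

    def ingest(self, uri, text):
        self.index[uri] = text

    def take_down(self, uri):
        # Centralized moderation: the post stops being served from here.
        self.taken_down.add(uri)

    def serve(self, uri, labels_by_uri=None):
        if uri in self.taken_down or uri not in self.index:
            return None
        # Labels from a subscribed moderation service ride along with the
        # post; they never delete it.
        labels = sorted((labels_by_uri or {}).get(uri, ()))
        return {"text": self.index[uri], "labels": labels}


def relay(event, app_views):
    """Broadcast one PDS event to every app view that is listening."""
    for app_view in app_views:
        app_view.ingest(*event)


pds = PDS()
app_view = AppView()
relay(pds.create("post/1", "hello"), [app_view])
relay(pds.create("post/2", "something rude"), [app_view])

labeler_output = {"post/2": {"rude"}}           # a labeler can only annotate
print(app_view.serve("post/2", labeler_output))  # served, with the label attached

app_view.take_down("post/2")                     # only the app view can do this
print(app_view.serve("post/2", labeler_output))  # None
print("post/2" in pds.records)                   # True: still on the PDS
```

In this sketch the post survives on the PDS after the takedown, which is why a second app view with different policies could, in principle, still serve it.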

The key takeaways here are:

- There's no equivalent to Mastodon's defederation in AT Protocol. Instead, various different players in the stack have the power to silence or ignore a user or set of users.

- It's not either/or between community and centralized moderation on Bluesky, it's both/and. Like in my projector example, Bluesky is the projector itself. You're stuck with Bluesky and their in-house moderation policies. Ipso facto, centralized moderation exists on Bluesky.

Software as Resistance

A question/concern that I seem to share with the Bluesky team (at least with Paul) and that I've been thinking about a lot lately is:

how many engineering resources and how much money would it take to give a group of users significant control of their collective experience without needing to be separate from a broader social network?

For an app like Twitter/X, I think if you really wanted to push for and protect the organizing potential of a particular in-group, your only real option is to buy the app for $44B. Otherwise, you're essentially powerless and would probably have to cease organizing and find alternatives.

Bluesky's vision for the future is at least aiming for a world where communities can own different parts of the stack without needing to be isolated. So for me, the next question is "what would it really take to get there?" For Blacksky, I think it means at least owning a custom feed, block/mutelist, moderation service, PDS, and client. I can't build it all myself, and as others have pointed out I'm not a trust & safety expert, nor am I rolling in Y Combinator money but -- I'd like to give it a shot 😃.

I take a very emergent approach with everything I build, but some non-obvious things I've thought about doing both in and outside of the ATP stack are:

- Creating a blocklist to keep abusive users away from the community, since as a feed operator you can see who's viewing your feed. (I'm already doing this.)

- Preventing a PDS from being crawled by a Relay by setting an allowlist at the network level. PDSs on ATP will be generic so even when there's a TikTok clone, a Reddit clone, etc. there will always be just one Blacksky PDS.

- Partnering with Open Measures to identify terms and language used by hate groups to build up a set of users and posts to flag with a moderation service.

- In the event we find ourselves in an "every Black person is a Jorbiter" 2.0 situation but with labeling (I'm already on some mute lists), we'll also be able to label that labeler.
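As a rough idea of what the term-based flagging above could look like, here's a small Python sketch. It assumes a term list already exists (how it would be sourced, from Open Measures or anywhere else, is out of scope, and no real API is shown). The function names, the post dictionaries, the `needs-review` label, and the placeholder terms are all hypothetical.

```python
import re


def build_matcher(terms):
    """Compile one case-insensitive, whole-word pattern from a list of terms.

    Assumes each term starts and ends with a letter or digit, so that the
    word-boundary anchors behave as expected.
    """
    if not terms:
        raise ValueError("need at least one term")
    escaped = [re.escape(term) for term in terms]
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


def flag_posts(posts, matcher, label="needs-review"):
    """Return label candidates for posts whose text matches a listed term.

    Nothing is removed here; the output is a queue for a human or a
    moderation service to act on.
    """
    flagged = []
    for post in posts:
        if matcher.search(post["text"]):
            flagged.append(
                {"uri": post["uri"], "author": post["author"], "label": label}
            )
    return flagged


# Placeholder terms and posts, purely for illustration.
matcher = build_matcher(["badterm", "worse phrase"])
posts = [
    {"uri": "post/1", "author": "a.example", "text": "This has BadTerm in it"},
    {"uri": "post/2", "author": "b.example", "text": "badtermish is another word"},
    {"uri": "post/3", "author": "c.example", "text": "a Worse Phrase entirely"},
]

flagged = flag_posts(posts, matcher)
print([item["uri"] for item in flagged])               # ['post/1', 'post/3']
print(sorted({item["author"] for item in flagged}))    # ['a.example', 'c.example']
```

Plain term matching like this is blunt: it misses coded language and can flag people quoting or discussing a term, so treating the output as candidates for review rather than automatic labels seems like the safer design.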

From the very beginning, the internet was antiblack. From BBSs to MW2 player lobbies. But we have also always built and organized online! Blacksky follows in the footsteps of Afronet, the Universal Black Pages, NetNoir, BlackPlanet.com, and more! This doesn't mean letting Bluesky PBC off the hook. I think the first step to addressing antiblackness is to be able to call it by name. But in the meantime, we can build.